Apple on Tuesday shared a handful of accessibility updates coming to its product lineup, designed to help with everything from reading text to following real-time captions to reducing motion sickness. The announcement comes ahead of Global Accessibility Awareness Day on May 15, with the features slated to launch later this year.

The tech giant is gearing up for its annual Worldwide Developers Conference on June 9, during which it's expected to share software updates across its platforms, including what's in store for iOS 19. It will also likely share updates to its AI features, as competitors continue to load up their phones with AI functions. Many of those AI-powered features have also bolstered accessibility capabilities on devices such as the iPhone and Pixel phones.

“At Apple, accessibility is part of our DNA,” said Apple CEO Tim Cook in a statement. “Making technology for everyone is a priority for all of us, and we’re proud of the innovations we’re sharing this year. That includes tools to help people access crucial information, explore the world around them and do what they love.”

Apple’s accessibility updates will arrive on iPhone, iPad, Mac, Apple Watch and Apple Vision Pro. Here’s what will soon be available on those devices.

Accessibility Nutrition Labels

Accessibility Nutrition Labels will show which App Store games and apps support the features you need.

On the App Store, a new section on app and game product pages will highlight accessibility features, so you can tell right away whether the capabilities you need are included before downloading. These features include VoiceOver, Voice Control, Larger Text, Sufficient Contrast, Reduced Motion and captions, among others.

Accessibility Nutrition Labels will be available on the App Store worldwide. Developers will have access to guidance on the criteria apps should meet before displaying accessibility information on their product pages.

Magnifier for Mac

Magnifier is a tool that lets people who are blind or have low vision zoom in, read text and detect objects around them on iPhone or iPad. Now, the feature is coming to the Mac.

Magnifier for Mac connects to a camera, such as the one on your iPhone, so you can zoom in on your surroundings, like a screen or whiteboard. You can use Continuity Camera on the iPhone to link it to your Mac or opt for a USB connection to a camera. The feature supports reading documents with Desk View. You can adjust what’s on your screen, including brightness, contrast and color filters, to make text and images easier to see.

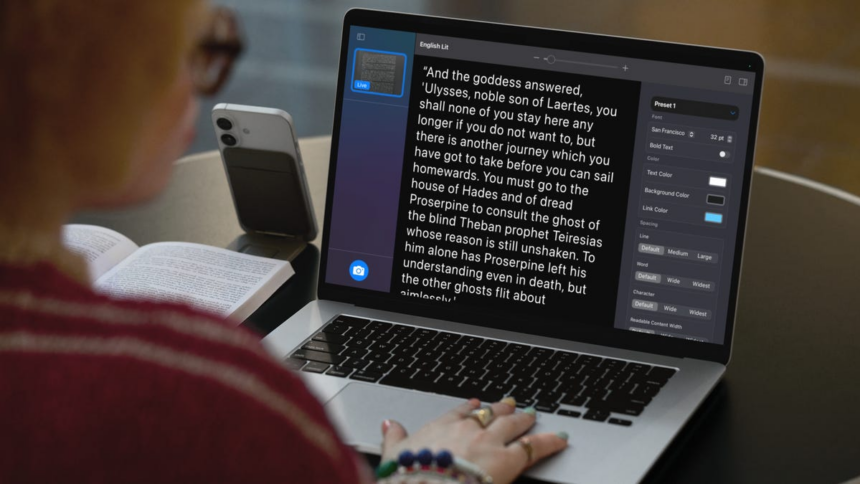

Accessibility Reader

This new reading mode on iPhone, iPad, Mac and Vision Pro is designed to make text easier to read for people with a range of disabilities, including those with dyslexia or low vision. Accessibility Reader lets you customize text and focus on what you’re reading by adjusting the font, color and spacing. It also supports Spoken Content, so your device can read aloud what’s on the screen.

Accessibility Reader can be used within any app and is built into Magnifier on iOS, iPadOS and macOS. You can launch the feature to interact with real-world text, such as menus and books.

Braille Access

Braille Access essentially turns iPhone, iPad, Mac or Vision Pro into a braille note taker. You can launch any app by typing with Braille Screen Input or a connected braille device, then take notes in braille format and perform calculations using Nemeth Braille.

You can also open Braille Ready Format files within Braille Access, letting you access books and files that were created on a braille note-taking device.

Live Captions on Apple Watch

Live Listen controls and Live Captions will show real-time text on your Apple Watch and let you remotely control a Live Listen session on your iPhone.

Live Listen is a feature that takes audio captured by an iPhone and streams it to compatible AirPods, Beats or other headphones, essentially turning your phone into a remote microphone. Now, that feature is coming to Apple Watch, along with Live Captions that show real-time text of what’s being heard through an iPhone. That way, people can listen to the audio while reading live captions on their Apple Watch.

You can also use your Apple Watch as a remote control to start or stop Live Listen sessions, as well as to jump back if you missed something. That means you won’t have to get up in the middle of a class or meeting to grab or control your iPhone; you can do it from across the room with your watch. Live Listen can also be used with the hearing aid feature on AirPods Pro 2.

Vision Accessibility on Apple Vision Pro

Apple Vision Pro is adding a handful of features for people who are blind or have low vision. An update to Zoom will let you magnify anything in your surroundings using Vision Pro’s main camera. Live Recognition will describe your surroundings, identify objects and read documents using VoiceOver.

A new API for developers will also let approved apps access the headset’s main camera, so you can get live visual interpretation assistance in apps such as Be My Eyes.

Other accessibility updates

Apple shared a handful of other updates coming to its accessibility features, including Vehicle Motion Cues, which can help reduce motion sickness when looking at a screen in a moving vehicle. The feature is coming to Mac, and you can also customize the animated dots that appear on iPhone, iPad and Mac.

Personal Voice lets people at risk of losing their ability to speak create a voice that sounds like them using AI and on-device machine learning. Now it’s faster and easier to use. Instead of reading 150 phrases to set up the feature and waiting overnight for it to process, Personal Voice can now create a more natural-sounding voice from just 10 recorded phrases in under a minute. Apple is also adding support for Spanish (Mexico).

Name Recognition will alert you when your name is called.

Similar to Eye Tracking, which lets you control your iPhone and iPad using only your eyes, Head Tracking will let you navigate and control your device with head movements.

You can now customize Music Haptics on iPhone, which plays a series of taps, textures and vibrations along with audio in Apple Music. You can choose to experience those haptics for the whole song or only for vocals, and you can also adjust the overall intensity.

Sound Recognition, which alerts people who are deaf or hard of hearing to sounds such as sirens, doorbells or car horns, is adding Name Recognition so they can also know when their name is called.

Live Captions is also adding support for more languages around the world, including English (India, Australia, UK, Singapore), Mandarin Chinese (Mainland China), Cantonese (Mainland China, Hong Kong), Spanish (Latin America, Spain), German (Germany) and Korean.